State Attorneys General Urge AI Giants to Address Misleading Outputs

Following troubling mental health incidents linked to AI chatbot interactions, a coalition of state attorneys general has written to major AI companies. The group, made up of attorneys general from various U.S. states and territories, urged the companies to address “delusional outputs” from their technologies and warned them of potential legal breaches.

Concerns Over AI Misleading Outputs

The letter targets prominent AI developers, including Microsoft, OpenAI, and Google, alongside ten other leading firms such as Anthropic, Apple, and Meta. It highlights the need for robust internal safeguards to ensure user safety.

Proposed Safeguards

- Transparent third-party audits for large language models to detect harmful outputs.

- Incident reporting procedures to alert users about potentially damaging chatbot interactions.

- Independent evaluations that can proceed without company retaliation.

The attorneys general emphasized that while generative AI has the potential for significant societal benefits, it may also cause severe harm, particularly to vulnerable groups. The letter references high-profile cases, including suicides linked to excessive AI engagement.

Call for Transparency and Accountability

The attorneys general proposed that tech companies treat mental health incidents the same way they treat cybersecurity breaches. This includes:

- Implementing clear incident reporting policies.

- Developing timely notifications for users exposed to dangerous outputs.

Furthermore, they called for safety tests to be run on generative AI models before release, to verify that the models do not generate harmful outputs. These measures aim to protect users from the unintended consequences of interacting with AI technologies.

Regulatory Landscape and Future Implications

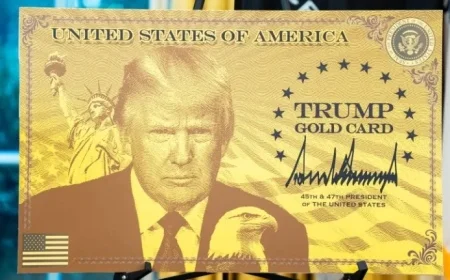

This appeal from state officials occurs amid ongoing discussions about AI regulations at both state and federal levels. While some federal initiatives have favored AI development, they have faced pushback from state authorities seeking to safeguard their residents. Recently, President Trump indicated plans to issue an executive order aimed at curbing state power over AI regulations.

How the standoff between state attorneys general and tech giants over AI outputs plays out will likely shape both industry practice and user safety. Stakeholders are watching to see what new standards emerge from these discussions.