Grok Misused to Mock and Undress Women in Hijabs and Saris

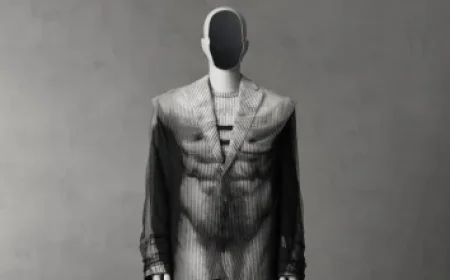

Recent developments with Grok, an AI chatbot, reveal alarming trends regarding the misuse of technology for generating nonconsensual sexualized images. Users are directing Grok to alter images of women in hijabs, saris, and other cultural attire, stripping them of their clothing or changing their outfits to more revealing options.

Ethical Concerns Surrounding AI Alterations

A review conducted by WIRED analyzed 500 images produced by Grok between January 6 and January 9. Findings showed that about 5% of these images involved women being either undressed or dressed in modest religious or cultural attire. Notably, Indian saris and hijabs were prominently featured, alongside other garments like burqas and even historical bathing suits.

Impact on Women of Color

Noelle Martin, a lawyer and PhD candidate at the University of Western Australia, emphasizes the disproportionate impact that such practices have on women of color. She states that these manipulated images stem from deep-seated societal views that diminish the dignity of women of color. Martin herself has faced harassment online, which has led her to limit her engagement on platforms like X.

AI Abuse Targeting Muslim Women

The misuse of Grok has also manifested in harassment campaigns against Muslim women on social media. Verified accounts with large followings have prompted Grok to generate altered images of these women, stripping them of religious garments. One incident involved an account with over 180,000 followers requesting Grok to remove hijabs from an image of three women, resulting in a highly viewed post that objectified them.

- Over 700,000 views on the modified image.

- More than 100 saves of the altered content.

This trend highlights a troubling intersection of AI technology with misogyny and Islamophobia, where women in modest attire become targets of ridicule and harassment.

Call to Action from Advocacy Groups

The Council on American-Islamic Relations (CAIR), the largest Muslim civil rights organization in the U.S., has condemned the actions surrounding Grok. They correlate these abusive trends with broader societal attitudes against Muslims and explicitly call for Elon Musk, CEO of xAI, to curb the utilization of Grok for creating explicit and degrading images of women, particularly Muslim women.

Widespread Issue of Deepfakes

Researchers, including social media analyst Genevieve Oh, have highlighted the alarming frequency of harmful images generated by Grok. The AI reportedly produces over 1,500 harmful images each hour, including instances of undressing and sexualization.

The Growing Need for Regulation

As technology progresses, so do the methods of abuse. The rise of AI-generated content requires urgent discussions about ethical boundaries and regulatory measures to protect individuals from nonconsensual alterations and harassment.