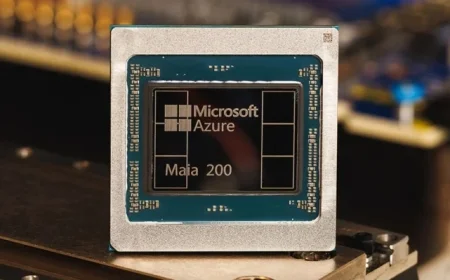

Microsoft Unveils Advanced Chip Enhancing AI Inference Capabilities

Microsoft has launched the Maia 200, its latest in-house chip, aimed at enhancing AI inference capabilities. The new silicon workhorse builds on the technology of its predecessor, the Maia 100, released in 2023.

Maia 200 Specifications and Performance

The Maia 200 chip is designed to run powerful AI models more efficiently and at higher speeds. It features:

- Over 100 billion transistors

- More than 10 petaflops of performance in 4-bit precision

- Approximately 5 petaflops in 8-bit precision

This marks a significant upgrade in inference performance. Inference is the process of running trained models, as opposed to the compute-intensive work of training them. As AI technologies evolve, controlling inference costs has become critical for companies operating in this space.
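To put the quoted figures in perspective, here is a rough back-of-envelope sketch in Python. It uses only the two throughput numbers listed above, treated as round values. Everything else is an assumption for illustration: the function names are hypothetical, the 50-billion-parameter model is invented, and the rule of thumb of roughly 2 floating-point operations per parameter per generated token is a common approximation rather than anything Microsoft has published. Real-world inference is often limited by memory bandwidth rather than compute, so the result is a theoretical ceiling, not a benchmark.

```python
# Back-of-envelope sketch based on the peak figures quoted in the article.
PETA = 10**15

PEAK_FLOPS = {
    "fp4": 10 * PETA,  # "more than 10 petaflops" in 4-bit precision
    "fp8": 5 * PETA,   # "approximately 5 petaflops" in 8-bit precision
}


def precision_speedup(peak=PEAK_FLOPS):
    """Ratio of peak 4-bit throughput to peak 8-bit throughput."""
    return peak["fp4"] / peak["fp8"]


def compute_bound_tokens_per_second(num_params, precision, peak=PEAK_FLOPS):
    """Theoretical ceiling on generated tokens per second.

    Assumption: about 2 floating-point operations per parameter per token,
    ignoring memory bandwidth, batching, and attention overhead.
    """
    flops_per_token = 2 * num_params
    return peak[precision] / flops_per_token


if __name__ == "__main__":
    hypothetical_params = 50 * 10**9  # invented 50-billion-parameter model
    print("FP4 vs FP8 peak ratio:", precision_speedup())
    for precision in ("fp4", "fp8"):
        ceiling = compute_bound_tokens_per_second(hypothetical_params, precision)
        print(precision, "ceiling (tokens/s):", ceiling)
```

The point of the sketch is the ratio: halving the precision from 8 bits to 4 bits roughly doubles the peak throughput, which is one reason low-precision formats matter for inference costs.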

Impact on AI Optimization

Microsoft believes the Maia 200 will play a key role in optimizing AI operations, enabling businesses to function more efficiently while reducing power consumption. According to the company, a single Maia 200 node can effortlessly handle today’s largest AI models and is poised to accommodate larger models in the future.

Strategic Shift Towards Self-Designed Chips

The introduction of the Maia 200 is part of a broader trend among technology giants to design their own chips and reduce their reliance on Nvidia. Competitors like Google and Amazon have also embraced this strategy. Google offers its tensor processing units (TPUs) as cloud-based compute power. Meanwhile, Amazon’s latest AI accelerator, Trainium3, launched in December.

According to Microsoft, the Maia 200 delivers three times the FP4 performance of Amazon’s third-generation Trainium and outperforms Google’s seventh-generation TPU in FP8 performance. This positioning allows Microsoft to compete effectively with the alternatives on the market.

Current Applications and Development

Microsoft’s Superintelligence team is already using the Maia chip to power its AI models, including the operations of its Copilot chatbot. Microsoft has also invited developers, academics, and frontier AI labs to use its Maia 200 software development kit.

This strategic move not only enhances AI inference capabilities but also solidifies Microsoft’s commitment to advancing AI technologies in a competitive landscape.