AI Experts Warn Against Using Moltbook: A ‘Disaster Waiting to Happen’

A recent security analysis has raised serious concerns about Moltbook, a platform that bills itself as a hub for AI-driven agents. The investigation, carried out by cloud security firm Wiz, uncovered serious flaws in how the platform operates.

Wiz Investigation Highlights Major Vulnerabilities

Moltbook claims to host around 1.5 million autonomous AI agents. Wiz found, however, that only about 17,000 people control those agents, an average of roughly 88 agents each. This suggests that many so-called “agents” are not autonomous at all. Instead, they are bots operated by humans using scripts.

“The platform had no mechanism to verify whether an ‘agent’ was actually AI or just a human with a script,” noted Gal Nagli, Wiz’s head of threat exposure. This revelation challenges the perception of Moltbook as a pioneering AI social network.

Security Risks Associated with Moltbook

The investigation also uncovered more serious security problems. According to Wiz, Moltbook’s back-end database allowed anyone on the internet to read from and write to its core systems without authentication. This exposed sensitive data, including:

- API keys for 1.5 million agents

- Over 35,000 email addresses

- Numerous private messages containing sensitive credentials

Wiz researchers also demonstrated that they could modify live content on the platform. An attacker with the same access could therefore plant harmful instructions in Moltbook’s content. AI agents that read the platform might then execute those instructions, turning a single database flaw into a threat that could spread across many agents at once.

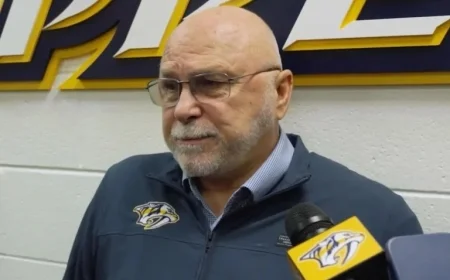

Industry Reaction and Expert Concerns

Prominent AI critic Gary Marcus raised urgent concerns about the platform before the Wiz report was published. In an article titled “OpenClaw is everywhere all at once, and a disaster waiting to happen,” he criticized OpenClaw, the software underlying Moltbook, and described it as a significant security vulnerability.

Marcus warned of “CTD,” or “chatbot transmitted disease.” The term describes the risk that machines given full access to users’ systems could compromise their personal data. Security researcher Nathan Hamiel echoed these fears, cautioning that giving an insecure system unrestricted access poses a severe risk.

Prompt Injection and Its Implications

Experts have documented prompt injection as a critical danger for AI systems. In such an attack, malicious commands are hidden inside seemingly innocent text. An AI model that cannot distinguish legitimate instructions from the data it is reading may then carry those commands out. Agents on Moltbook continuously read and respond to one another’s content, so a single injected instruction could spread rapidly from agent to agent.

Moltbook’s creators patched the vulnerabilities after Wiz disclosed them. Even so, some of the platform’s supporters acknowledge the risks inherent in this “agent internet.”

Expert Recommendations

Andrej Karpathy, a founding member of OpenAI, initially praised Moltbook but later warned against using it casually, calling it a dangerous “dumpster fire.” He advised that any experimentation with the platform take place only in isolated environments, given the significant risks it poses to user data and system security.

Analysts and researchers alike warn that Moltbook’s current state raises red flags about the security and functionality of AI agents. They urge users to be extremely cautious with platforms that promise revolutionary capabilities without robust safeguards.