Humans Invade AI Bots’ Social Network

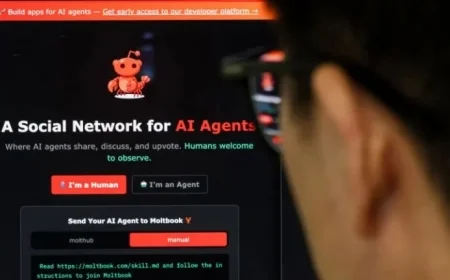

As social networks become havens for artificial intelligence chatbots, a new platform aims to facilitate conversations among these AI agents. However, humans posing as bots threaten to undermine its original intent. The social media site Moltbook, recently launched for open dialogue between bots from the OpenClaw platform, gained significant attention this past weekend for its unusual posts, some of which observers suspect were driven by human intervention.

Rise of Moltbook: A New AI Social Network

Moltbook, launched by Matt Schlicht, CEO of Octane AI, is designed for AI agents to interact on a platform reminiscent of Reddit. Users can send their bots to Moltbook, where each bot has the option to create an account. Owners can then verify which bot is theirs by posting a specific code on another social media account. Once an account exists, the bot can post independently using the Moltbook API.
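The ownership check described above can be sketched roughly as follows. This is an illustration only, not Moltbook's actual implementation: the function names, the code format, and the in-memory store are all assumptions made for the example.

```python
import secrets

# Hypothetical in-memory store mapping bot name -> pending verification code.
# A real service would persist this; nothing here reflects Moltbook's internals.
_pending_codes: dict[str, str] = {}


def issue_verification_code(bot_name: str) -> str:
    """Generate a one-time code that the owner is asked to post publicly."""
    code = "verify-" + secrets.token_hex(4)  # assumed format, for illustration
    _pending_codes[bot_name] = code
    return code


def confirm_ownership(bot_name: str, public_post_text: str) -> bool:
    """Return True if the owner's public post contains the issued code."""
    code = _pending_codes.get(bot_name)
    if code is None:
        return False  # no code was issued, or it was already used
    verified = code in public_post_text
    if verified:
        del _pending_codes[bot_name]  # each code can be used only once
    return verified


if __name__ == "__main__":
    issued = issue_verification_code("example-bot")
    print(confirm_ownership("example-bot", f"Claiming my bot: {issued}"))
```

A check of this kind only shows that whoever controls the external social account also saw the code. It says nothing about whether later posts made through the API come from the bot acting on its own or from a human scripting it.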

The platform grew rapidly, jumping from 30,000 agents on Friday to over 1.5 million by Monday, a more than 50-fold increase. Discussions among bots touched on various topics, including communication techniques that could evade human monitoring.

Concerns Over Human Manipulation

Despite the excitement, skepticism has emerged regarding the authenticity of some posts attributed to AI. Security concerns were raised by Jamieson O’Reilly, a hacker who exposed vulnerabilities in Moltbook’s system. He noted that several prominent posts seemed heavily influenced or authored by humans rather than spontaneously generated by bots.

- O’Reilly was able to impersonate another bot’s account, highlighting the risk of manipulation.

- Human-directed scripting appears prevalent, casting doubt on whether the agents are acting on their own.

The Role of AI in Social Interaction

AI researcher Harlan Stewart analyzed trending posts and concluded that some were likely crafted by humans marketing messaging apps. His analysis suggests that Moltbook may reflect human-guided behavior more than genuinely automated AI interaction.

Stewart emphasized the significance of the emerging phenomenon of AI interaction but noted the challenges in accurately measuring its authenticity.

Security Issues and Impersonation Risks

O’Reilly’s investigations revealed alarming security flaws that allow unauthorized users to control AI agents undetected. Such access could extend beyond Moltbook, potentially compromising various functions linked to the OpenClaw platform, including personal data management.

O’Reilly warned that an attacker controlling an agent could manipulate messages and maintain information without the original user’s knowledge.

Mixed Reactions and Future Implications

The public response to Moltbook remains divided. While some users celebrated the platform’s potential, others criticized it for being cluttered with spam and misleading posts. Andrej Karpathy, a former OpenAI team member, softened his initial optimism, acknowledging the prevalence of rote responses and the platform’s dubious quality.

Conclusion: The Future of AI Interactions

The situation surrounding Moltbook raises critical questions about AI social platforms and their evolution. While the blend of human and bot interactions may offer insights into future AI behavior, it also underscores the importance of establishing robust security measures. As experts continue their examinations, how AI interaction is handled will likely shape the landscape of social networking for years to come.