Reinforcement Learning Cuts HVAC Life-Cycle Costs Over a 30-Year Horizon

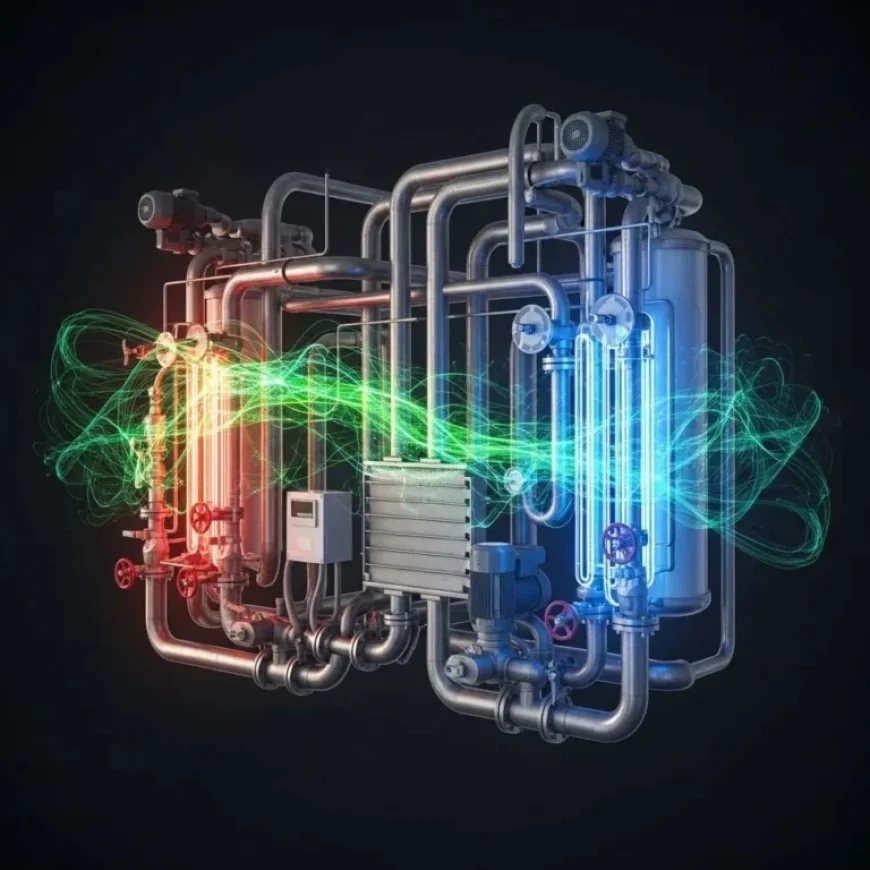

Recent research examines how to optimize heating, ventilation, and air conditioning (HVAC) systems for commercial buildings. A team from Plaksha University, including Tanay Raghunandan Srinivasa, Vivek Deulkar, Aviruch Bhatia, and Vishal Garg, has developed a method that uses reinforcement learning to optimize both the operation and the sizing of chillers and thermal energy storage (TES) systems.

Significance of Reinforcement Learning in HVAC Optimization

The approach addresses the trade-off between the cost of chiller capacity and the cost of thermal energy storage. It aims to minimize life-cycle cost over a 30-year period while keeping cooling performance reliable, so that savings in energy and money do not come at the expense of unmet cooling demand.

Bridging Chiller and Thermal Energy Storage Costs

The study presents a method for co-designing chiller and TES systems. The balance matters because adding chiller capacity costs significantly more than adding TES capacity, so a smaller chiller paired with more storage can be the cheaper design as long as demand is still met. The researchers formulated the problem as a finite-horizon Markov Decision Process (MDP) and used a Deep Q Network (DQN) to derive chiller operating policies. The reported results are:

- Optimal Chiller Capacity: 700 units
- Optimal TES Capacity: 1500 units
- Cost Reduction: 7.6% compared to traditional strategies

The DQN policy was trained on historical data for cooling demand and electricity prices. The learned policy coordinates chiller operation with TES use, with the specific aim of lowering operating costs.
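To make the control problem concrete, the following is a minimal sketch of a single time step in a chiller-TES plant, as a DQN agent might see it. It is not the authors' model: the names `PlantConfig`, `step`, and `energy_per_cooling`, the linear electricity cost, and the rule that surplus cooling beyond the tank capacity is discarded are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlantConfig:
    chiller_capacity: float  # maximum cooling the chiller can produce per step
    tes_capacity: float      # maximum cooling the storage tank can hold


def step(config, tes_level, chiller_output, demand, price, energy_per_cooling=1.0):
    """Advance the (hypothetical) plant by one time step.

    Returns (next_tes_level, electricity_cost, unmet_load).
    """
    # Clamp the action to the range the chiller can deliver.
    output = min(max(chiller_output, 0.0), config.chiller_capacity)
    # Cooling available now: fresh chiller output plus stored cooling.
    available = output + tes_level
    # Any demand beyond what is available counts as lost cooling load.
    unmet = max(demand - available, 0.0)
    # Surplus goes into storage; anything above the tank capacity is discarded.
    next_tes = min(max(available - demand, 0.0), config.tes_capacity)
    # Assumed cost model: price times the electricity drawn by the chiller.
    cost = price * output * energy_per_cooling
    return next_tes, cost, unmet
```

In a reinforcement learning setup of this kind, the reward would typically be the negative of `cost`, with a large penalty whenever `unmet` is positive. How the study shapes its reward is not stated here, so treat that as one plausible choice.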

Evaluating Infrastructure Design

The experiments evaluated a range of chiller-TES configurations under the constraint of zero loss of cooling load. Across these configurations, the DQN-based method consistently identified the most cost-effective infrastructure design. Combining life-cycle cost analysis with reinforcement learning therefore served two purposes: it produced a control policy for each candidate design, and it indicated potential for improved energy efficiency in HVAC applications.
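The configuration search described above can be sketched as a sweep over candidate sizes that discards any design with unmet load and keeps the one with the lowest life-cycle cost. Everything in this sketch is an assumption for illustration: the rule-based policy in `simulate_year` is a simple stand-in for the trained DQN, the cost is undiscounted, the input profile is treated as one representative year, and the unit costs are hypothetical parameters supplied by the caller.

```python
from itertools import product


def simulate_year(chiller_cap, tes_cap, demand, prices):
    """Run a simple rule-based policy (a stand-in for a learned DQN policy).

    Returns (operating_cost, unmet_load) over the given profile.
    """
    tes, cost, unmet = 0.0, 0.0, 0.0
    # Lower-median price: steps at or below it are treated as "cheap".
    cheap = sorted(prices)[(len(prices) - 1) // 2]
    for d, p in zip(demand, prices):
        if p <= cheap:
            # Cheap power: serve demand and fill the tank as far as possible.
            output = min(chiller_cap, d + (tes_cap - tes))
        else:
            # Expensive power: draw on storage first, run the chiller for the rest.
            output = min(chiller_cap, max(d - tes, 0.0))
        available = output + tes
        unmet += max(d - available, 0.0)
        tes = min(max(available - d, 0.0), tes_cap)
        cost += p * output
    return cost, unmet


def life_cycle_cost(chiller_cap, tes_cap, annual_opex, *,
                    chiller_unit_cost, tes_unit_cost, years=30):
    """Undiscounted life-cycle cost: capital cost plus operating cost over `years`."""
    capex = chiller_cap * chiller_unit_cost + tes_cap * tes_unit_cost
    return capex + years * annual_opex


def best_design(chiller_options, tes_options, demand, prices, *,
                chiller_unit_cost, tes_unit_cost, years=30):
    """Return (cost, chiller_cap, tes_cap) for the cheapest feasible design, or None."""
    best = None
    for c, t in product(chiller_options, tes_options):
        opex, unmet = simulate_year(c, t, demand, prices)
        if unmet > 0:
            continue  # enforce zero loss of cooling load
        lcc = life_cycle_cost(c, t, opex, chiller_unit_cost=chiller_unit_cost,
                              tes_unit_cost=tes_unit_cost, years=years)
        if best is None or lcc < best[0]:
            best = (lcc, c, t)
    return best
```

For example, `best_design([4, 6], [0, 4], [2, 2, 6, 4], [1, 1, 3, 3], chiller_unit_cost=10, tes_unit_cost=1)` selects the smaller chiller paired with storage, because the larger chiller's extra capital cost outweighs what it saves. In the study, the operating cost of each candidate comes from the DQN policy rather than from a fixed rule like the one used here.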

Implications for Sustainable HVAC Solutions

By optimizing the life-cycle costs of HVAC systems, this research may open the way to incorporating renewable energy sources and to more sustainable building climate control. The findings suggest that intelligent management of cooling infrastructure can reduce both the initial investment and ongoing operating costs.

Future Directions

The results are specific to the models used in the study. The researchers indicate that further work could apply the methodology to more complex HVAC systems and to varied climatic conditions. Examining how the DQN policy adapts to changes in electricity pricing or cooling demand is another direction for future work.

In conclusion, this research establishes a framework for optimizing HVAC systems over an extended life cycle and shows that reinforcement learning can help deliver cost-effective designs.