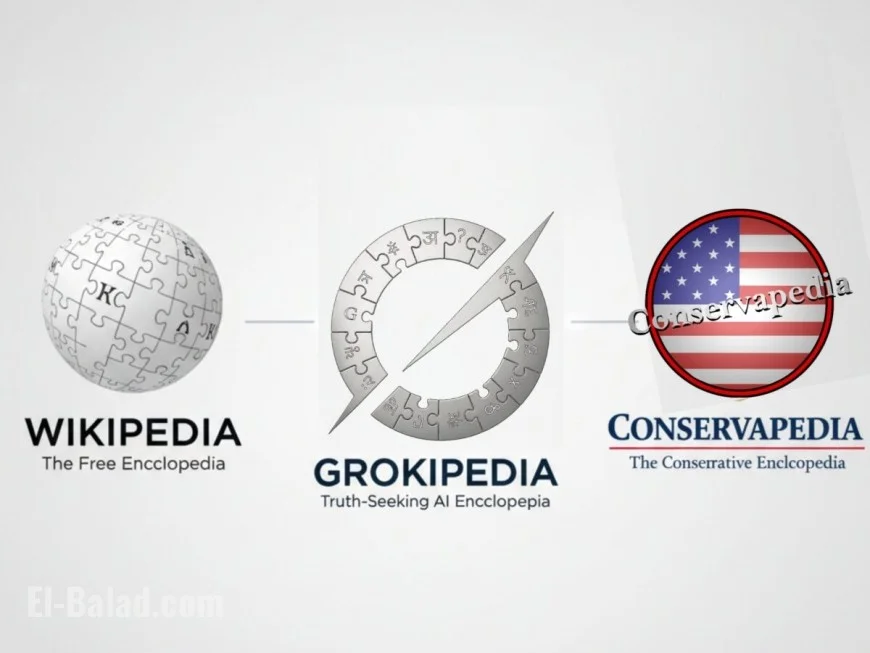

Grokipedia v0.1 quietly goes live: Musk’s AI encyclopedia launches with a smaller corpus and big ambitions

Grokipedia—an AI-written, wiki-style encyclopedia built by xAI—went live in an early v0.1 release late Monday, October 27. Styled like a traditional wiki with a search-first homepage and article layout, the site positions itself as a “truth-seeking” alternative to legacy encyclopedias while leaning on xAI’s Grok model and activity on X to keep entries fresh. At launch, Grokipedia surfaced roughly 885,000 articles, far fewer than the millions found on established platforms, but enough to give users a sense of scope and tone.

What launched with Grokipedia today

Early visitors encountered a minimal interface—logo, search bar, and browsable pages spanning tech, entertainment, sports, and current affairs. The build carries clear “beta” fingerprints: uneven topic depth, occasional formatting quirks, and a limited set of cross-links. Still, core functions are in place:

- AI-drafted entries that read like concise overviews with definitions, key facts, and short background sections.
- Conversational assist via embedded Grok prompts that propose follow-ups (“explain like I’m 12,” “compare with…,” “timeline”).
- Rapid refresh on time-sensitive topics, ostensibly by ingesting public posts and newsy chatter from X alongside open datasets.

The project arrives after a stop-start October in which xAI signaled a short delay to “purge propaganda” from early builds before flipping the switch on a smaller-scope debut.

How Grokipedia says it will differ from Wikipedia

Grokipedia’s pitch is less about copying a crowd-sourced encyclopedia and more about AI-first drafting with human oversight:

- Model-led summaries: Entries are generated and regularly re-generated by Grok, with citations and revision notes promised as the system matures.
- Source scoring: The roadmap emphasizes tagging claims with confidence levels and provenance so readers can see why the model believes a statement.
- Guardrails on contentious topics: Politically charged pages are expected to route through heightened review, both algorithmic and human, before updates go live.
- Tighter edit gates: Rather than open edit access to anyone immediately, Grokipedia plans a tiered contributor system to limit vandalism and speed moderation.
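The source-scoring and edit-gate ideas above can be pictured as a small routing rule. The sketch below is purely illustrative: xAI has not published its data model, so `Claim`, `Tier`, `ProposedEdit`, `route`, and the 0.8 confidence threshold are all assumptions made up for this example.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical contributor tiers; higher values mean more trust."""
    READER = 0
    CONTRIBUTOR = 1
    VERIFIED_EXPERT = 2


@dataclass
class Claim:
    text: str
    confidence: float  # 0.0 to 1.0, assumed to be supplied by the model
    sources: list[str] = field(default_factory=list)  # provenance labels


@dataclass
class ProposedEdit:
    claim: Claim
    author_tier: Tier
    contentious: bool  # assumed flag for politically charged pages


def route(edit: ProposedEdit, min_confidence: float = 0.8) -> str:
    """Decide where a proposed edit goes; the threshold is illustrative."""
    if edit.author_tier < Tier.CONTRIBUTOR:
        return "rejected"  # readers cannot edit directly
    if edit.contentious:
        return "human_review"  # heightened review for charged pages
    if not edit.claim.sources or edit.claim.confidence < min_confidence:
        return "human_review"  # unsourced or low-confidence claims
    return "auto_publish"
```

In this toy version, a well-sourced, high-confidence claim from a contributor on an ordinary page publishes automatically, while anything contentious, unsourced, or low-confidence waits for a human.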

Early critiques: size, slant, and safety

Three pressure points emerged within hours of the soft launch:

- Scope deficit: With under a million pages visible, coverage gaps are easy to find, especially on niche science, non-English history, and regional culture. The team frames this as a deliberate “start smaller, improve fast” approach.
- Framing and bias: Sample pages in sensitive domains (gender, geopolitics, public health) show firmer, sometimes binary framing than readers might expect from consensus-driven reference works. Supporters call it clarity; critics call it slant.
- Reliability and harm: Any system that leans on real-time social streams risks amplifying rumors or offensive content if filters fail. Grok has had headline-grabbing stumbles before; Grokipedia’s long-term credibility will hinge on catching those errors before they hit article pages.

Under the hood: what the v0.1 build implies

While xAI hasn’t published a full technical white paper, the behavior of the site and prior disclosures point to a familiar AI-reference stack:

- Retrieval-augmented generation (RAG): Articles are drafted with model outputs anchored to a live corpus that blends open datasets, licensed archives, and public posts.
- Continuous re-write loop: Fresh signals trigger partial rewrites rather than long talk pages, aiming for fast correction cycles.
- Telemetry for trust: Expect visible “last updated” stamps, change logs, and eventually per-claim attributions that can be audited.

If executed well, this design could make Grokipedia fast on breaking topics and modular for rollback when things go wrong. The tradeoff is cultural: less open deliberation, more model-mediated updates.
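To make that stack concrete, here is a minimal sketch of a retrieve-then-rewrite loop with a change log and rollback. It is not xAI's implementation: the keyword-overlap retrieval is a toy stand-in for a real search index, `generate` is a placeholder for a model call, and every name here (`Article`, `retrieve`, `rewrite_section`, `rollback`) is hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Article:
    title: str
    sections: dict[str, str]
    # Change log entries: (timestamp, section name, previous text)
    history: list[tuple[str, str, str]] = field(default_factory=list)
    last_updated: str = ""


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Toy retrieval: rank documents by words shared with the query."""
    words = set(query.lower().split())
    ranked = sorted(
        corpus,
        key=lambda doc: len(words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def generate(section: str, evidence: list[str]) -> str:
    """Placeholder for a model call; a real system would prompt an LLM."""
    return f"{section}: " + " ".join(evidence)


def rewrite_section(article: Article, section: str, corpus: list[str]) -> None:
    """Partially rewrite one section, logging the old text for rollback."""
    evidence = retrieve(f"{article.title} {section}", corpus)
    now = datetime.now(timezone.utc).isoformat()
    article.history.append((now, section, article.sections.get(section, "")))
    article.sections[section] = generate(section, evidence)
    article.last_updated = now


def rollback(article: Article) -> None:
    """Undo the most recent rewrite by restoring the logged text."""
    _, section, old_text = article.history.pop()
    article.sections[section] = old_text


corpus = [
    "Grokipedia launch had 885,000 articles",
    "weather is sunny",
    "Grokipedia launch date was October 27",
]
page = Article("Grokipedia", {"launch": "old text", "background": "unchanged"})
rewrite_section(page, "launch", corpus)  # only the "launch" section changes
rollback(page)                           # restores "old text"
```

Because each rewrite touches one section and records what it replaced, a bad update can be reverted without regenerating the whole page, which is the modularity the design appears to aim for.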

How to read Grokipedia right now

- Treat it as a beta: Use it for quick primers, not specialized research. Cross-check anything sensitive or high-stakes.
- Watch the metadata: Update times, revision notes, and any visible source markers are your best guide to freshness and confidence.
- Compare tone across pages: Differences in definition lines and section headings often reveal editorial stance; reading two or three related entries helps spot slant.
- File feedback early: The project’s value will track with how quickly it absorbs user corrections and expert input.

What’s next on the roadmap

The near-term milestones to watch:

- Citation transparency: Moving from generic “sources available” language to in-page, per-claim attributions.
- Contributor tiers: Opening verified editor programs in science, history, health, and law to stress-test human–AI collaboration.
- Internationalization: Adding parallel pages in major languages and ensuring cultural coverage that isn’t overly U.S.-centric.
- Quality dashboards: Public metrics on correction speed, error rates, and model confidence would go a long way toward trust.

Grokipedia is live in a limited, work-in-progress form—smaller than legacy encyclopedias but anchored to an AI system that can rewrite fast and pull from a broader live signal. That’s both its promise and its peril. If xAI can pair speed with transparent sourcing and responsible guardrails, Grokipedia could become a useful quick-read reference on dynamic topics. If not, the platform will struggle to escape the twin criticisms already forming on day one: too small and too slanted.