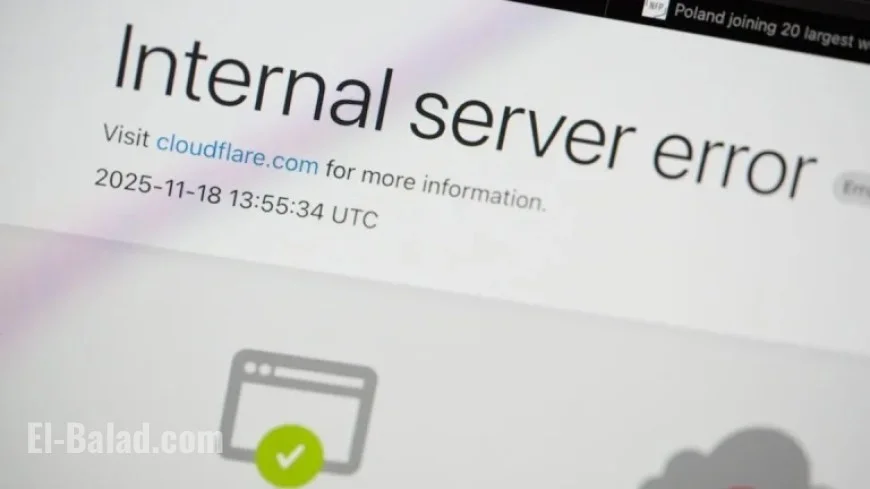

Cloudflare Outage Triggered by Unexpected File Size Doubling

Cloudflare recently experienced its most significant outage since 2019, triggered when a configuration file used by its bot management system unexpectedly doubled in size. As the faulty file propagated across the network, the core proxy began returning an overwhelming number of errors.

Details of the Cloudflare Outage

The incident originated in Cloudflare’s bot management system, which caps the number of machine learning features it can use at runtime at 200. A feature file exceeding that limit was distributed across the network, causing the proxy software to panic and return numerous HTTP 5xx error status codes.
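To illustrate why a hard limit need not end in a panic, here is a minimal sketch of a loader that rejects an oversized feature file and keeps serving with the last known-good configuration. It is not Cloudflare's code: the names `FeatureConfig`, `parse_feature_file`, and `BotModule` are hypothetical, and only the limit of 200 comes from the account above.

```python
from dataclasses import dataclass

MAX_FEATURES = 200  # runtime limit described in the article


class FeatureFileError(ValueError):
    """Raised when a candidate feature file fails validation."""


@dataclass(frozen=True)
class FeatureConfig:
    features: tuple


def parse_feature_file(names, limit=MAX_FEATURES):
    """Validate a candidate feature list before it is put into service."""
    if len(names) > limit:
        raise FeatureFileError(f"{len(names)} features exceeds limit of {limit}")
    return FeatureConfig(tuple(names))


class BotModule:
    """Hypothetical module that keeps its last known-good config on bad input."""

    def __init__(self, initial):
        self.active = initial
        self.rejected = 0

    def reload(self, names):
        """Try to swap in a new feature list; return True if it was accepted."""
        try:
            self.active = parse_feature_file(names)
            return True
        except FeatureFileError:
            # Keep serving with the previous config instead of crashing.
            self.rejected += 1
            return False


# Made-up data: a normal file, then one that exceeds the limit.
good = [f"f{i}" for i in range(60)]
oversized = [f"f{i}" for i in range(250)]

module = BotModule(parse_feature_file(good))
accepted = module.reload(oversized)
```

In this sketch the oversized file is counted and discarded, so a bad update degrades freshness rather than availability. Whether stale features are acceptable is a judgment call that depends on the system.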

Normally, these error codes are rare in Cloudflare’s network. The sudden surge in 5xx errors indicated a critical failure within the system, later attributed to the loading of an incorrect feature file. Unusually, the network kept alternating between recovering and failing.

Root Cause Analysis

The feature file was regenerated every five minutes by a query running on a ClickHouse database cluster, which was being gradually updated to improve permissions management. The query produced bad data only when it ran on a part of the cluster that had already been updated. As a result, each run could generate either a correct or a faulty configuration file, which was then rapidly distributed across the network.

- The alternation between good and bad configuration files led staff to believe, for a time, that an attack might be underway.

- Eventually, every ClickHouse node was generating the faulty configuration file, and the system settled into a stable but failing state.
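The intermittent behavior described above can be reproduced with a small simulation. This is a sketch under stated assumptions: the feature count of 120 is made up, and the model in which an updated node returns every row twice is an illustrative guess at how the output could double, not a detail given in this article.

```python
import random

# Made-up feature list; the real count is not given in the article.
BASE_FEATURES = [f"feature_{i}" for i in range(120)]


def query_node(updated):
    """Return feature rows from one node.

    Assumption for illustration: an updated node returns each row twice,
    which doubles the size of the generated file.
    """
    rows = list(BASE_FEATURES)
    if updated:
        rows += BASE_FEATURES
    return rows


def generate_file(updated_fraction, rng):
    """Run the query on a randomly chosen node of a partially updated cluster."""
    return query_node(updated=rng.random() < updated_fraction)


def dedupe(rows):
    """Drop repeated rows while preserving their original order."""
    return list(dict.fromkeys(rows))


rng = random.Random(0)  # fixed seed so the run is repeatable

# Half the cluster updated: each five-minute run may be good or bad.
sizes_mid_rollout = {len(generate_file(0.5, rng)) for _ in range(50)}

# Whole cluster updated: every run is bad, a stable failing state.
sizes_full_rollout = {len(generate_file(1.0, rng)) for _ in range(50)}
```

With half the nodes updated, both file sizes appear across runs, which matches the observed flapping between recovery and failure. Once the rollout completes, only the doubled size remains. A defensive step such as `dedupe` in the generation pipeline would have masked this particular fault, though it would not replace validating the file on ingestion.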

Resolution and Future Prevention

To resolve the issue, Cloudflare halted generation and propagation of the faulty file and manually inserted a known-good file into the distribution queue. It then forced a restart of the core proxy to restore normal operations. The team went on to restart the other services that the error had left in a bad state.

In light of this outage, Cloudflare is implementing measures to harden its systems against similar incidents in the future. Actions include:

- Hardening the ingestion of Cloudflare-generated configuration files, treating them with the same caution as user-generated input.

- Establishing more global kill switches for features.

- Preventing core dumps from overwhelming system resources.

- Reviewing all core proxy modules for potential error conditions.
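As an illustration of the kill-switch idea in the list above, here is a minimal sketch of a request handler that can bypass a misbehaving module globally. Everything here is hypothetical: the `KillSwitches` registry, the `handle_request` function, and the choice to fail open (serve the request without a bot score) are assumptions made for the example, not a description of Cloudflare's design.

```python
class KillSwitches:
    """Hypothetical in-memory registry of globally disabled features."""

    def __init__(self):
        self._disabled = set()

    def disable(self, feature):
        self._disabled.add(feature)

    def enable(self, feature):
        self._disabled.discard(feature)

    def is_enabled(self, feature):
        return feature not in self._disabled


def handle_request(path, switches, score_bot):
    """Serve a request, skipping or tolerating a failing bot-scoring module."""
    score = None
    if switches.is_enabled("bot_management"):
        try:
            score = score_bot(path)
        except Exception:
            # Fail open: a scoring fault should not become a 5xx response.
            score = None
    return {"status": 200, "path": path, "bot_score": score}


def broken_scorer(path):
    """Stand-in for a module that fails on a bad feature file."""
    raise RuntimeError("feature file too large")


calls = []


def counting_scorer(path):
    """Stand-in scorer that records each call so bypassing can be observed."""
    calls.append(path)
    return 30


switches = KillSwitches()

# Module enabled but failing: the request still succeeds without a score.
degraded = handle_request("/", switches, broken_scorer)

# Kill switch thrown: the scoring module is not called at all.
switches.disable("bot_management")
bypassed = handle_request("/", switches, counting_scorer)
```

Failing open trades bot protection for availability during an incident. A system that must block suspicious traffic at all costs might instead fail closed, so the right default depends on the feature being switched off.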

While Matthew Prince, Cloudflare’s CEO, acknowledges that the company cannot guarantee that outages of this magnitude won’t occur again, he stresses that each past incident has resulted in more resilient systems. Cloudflare remains committed to improving its infrastructure to better serve its users and prevent similar disruptions.