Celestial AI to Be Acquired by Marvell: Why Optical I/O Is Now the Hottest Battleground in AI Data Centers

Marvell has struck a definitive deal to acquire Celestial AI, the optical-interconnect startup behind the Photonic Fabric™ platform that links chips, memory, and systems with light instead of copper. The transaction, announced Tuesday, values Celestial AI at about $3.25 billion upfront in cash and stock, with performance-based earn-outs that could lift the total into the mid-$5 billion range. Closing is targeted for early 2026, pending regulatory approvals and customary closing conditions.

Deal terms at a glance

- Buyer: Marvell Technology
- Target: Celestial AI (Photonic Fabric optical I/O and chiplet interconnect)
- Headline value: ~$3.25B upfront; additional milestone payments possible
- Timing: Expected to close Q1 2026 (subject to regulatory review)
- Strategic fit: Marvell’s data-infrastructure portfolio (custom silicon, switching, optical modules) plus Celestial’s optical I/O for “scale-up” AI systems

What Celestial AI actually does

Celestial AI builds optical I/O chiplets and fabrics that move data between accelerators, CPUs, and high-bandwidth memory using photons rather than electrons. Branded Photonic Fabric, the technology targets three bottlenecks that define modern AI clusters:

- Bandwidth: Optical links deliver far higher throughput than short-reach copper traces at advanced nodes.
- Reach and topology: Light can span package, board, and rack distances with less signal loss, allowing new “pooled memory” and tightly coupled XPU designs.
- Power: Lower per-bit energy than electrical SerDes helps curb the ballooning wattage of AI training and inference.

In practical terms, Photonic Fabric chiplets can be co-packaged with custom accelerators and scale-up switches, creating a low-latency fabric for model-parallel and memory-bound workloads. That unlocks designs where multiple compute dies share memory resources with near-on-package efficiency—critical as parameter counts and context windows surge.

Why Marvell wants Celestial AI now

AI compute has two complementary networking needs:

- Scale-out: Ethernet and InfiniBand switch silicon that connects many servers across a cluster.
- Scale-up: Ultra-dense, low-latency links that bind chips and memory within a server, a tray, or a rack as if they were one giant processor.

Marvell already plays heavily in scale-out with switching and optical modules. Celestial AI fills the scale-up gap, giving Marvell a top-to-bottom story from on-package photonics to rack-level interconnect. The combined roadmap aims to:

- Enable co-packaged optics with custom XPUs at hyperscalers.
- Offer optical memory pooling so accelerators aren’t stranded waiting on HBM.
- Reduce interconnect power, a rising share of system energy, as models grow.

Where this lands in the competitive landscape

- NVIDIA: NVLink/NVLink-C2C and high-radix switches dominate many AI pods today; optical NVLink variants are on long-term roadmaps.
- Broadcom & others: Ethernet switching and optical pluggables continue to scale; co-packaged optics efforts are underway across the ecosystem.
- AMD & custom silicon efforts: Advanced packaging and chiplet fabrics aim to tighten compute-memory coupling; optical I/O provides another speed-and-reach lever.

By absorbing Celestial AI, Marvell positions itself as a neutral interconnect supplier that can co-design with any custom XPU program, not just a single accelerator vendor.

What hyperscalers get out of this

Large cloud providers have been testing optical I/O for next-gen racks that behave like a single, memory-rich “super-socket.” The appeal:

- Bigger, faster model shards: Lower latency between chips lets teams scale parameter counts without brutal efficiency penalties.
- Higher utilization: Pooled memory reduces headroom waste, improving TCO.
- Thermals and power: Moving high-speed I/O to light helps data centers stay within cooling envelopes.

With the deal, Marvell is signaling it will productize those proofs of concept—turning lab deployments into volume platforms tied to custom silicon roadmaps.

What to watch between now and close

- Co-packaged demos: Expect staged unveilings of XPU + Photonic Fabric prototypes and reference systems.
- Ecosystem reveals: Memory, packaging, and module partners will matter, especially for HBM capacity and optical connectorization inside a chassis.
- Standardization vs. lock-in: Buyers will look for interoperable optics and fabrics to avoid single-vendor traps.
- Regulatory timeline: The early-2026 close target suggests Marvell expects a straightforward review, but AI-infrastructure consolidation is under the microscope globally.

Context: Celestial AI’s rise

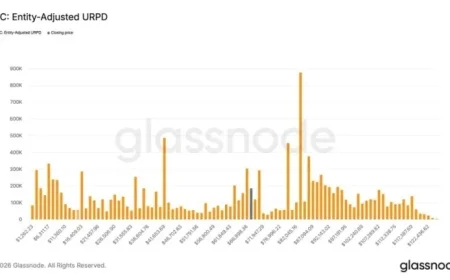

Founded to commercialize optical I/O at system scale, Celestial AI spent the past two years moving from concept silicon to customer pilots while raising just over $500 million across multiple rounds, including a large Series C1 in 2025. Those funds underwrote the packaging, photonics, and software needed to make optical I/O a practical building block—not merely a research milestone.

With Celestial AI, Marvell is betting that scale-up optical interconnect becomes as indispensable to AI systems as scale-out switching already is. If the integration stays on schedule and hyperscaler designs lock in, 2026 could be the year optical I/O steps out of the lab and into volume racks—reshaping how the industry builds memory-rich, power-aware AI computers.